Can people tell the difference between human and AI writers and does this affect their trust in brands?

Artificial Intelligence (AI) can now comfortably create images, music, and text that could’ve been made by a talented human. The world of online content is seeing a huge shift in the 2020s. This is heavily impacted by the generative chatbot, ChatGPT, which is growing rapidly, hitting its first one million users in just five days.

While those interested in tech may know a lot about AI, and chatbots like ChatGPT, it’s not common knowledge for everyone. In fact, some people might not even know how sophisticated the output of AI tools is and if what they’re reading online was produced by a human or an AI.

To find out more on this topic, we surveyed over 1,900 Americans to see what they thought about AI content online, how it impacted their trust in brands, and ultimately if they could tell the difference between AI and human content. We asked people to guess whether text was created by AI or humans across health, finance, entertainment, technology, and travel content. Learn more about the methodology of this study here.

Here’s what the American public thinks of AI content online.

Key findings

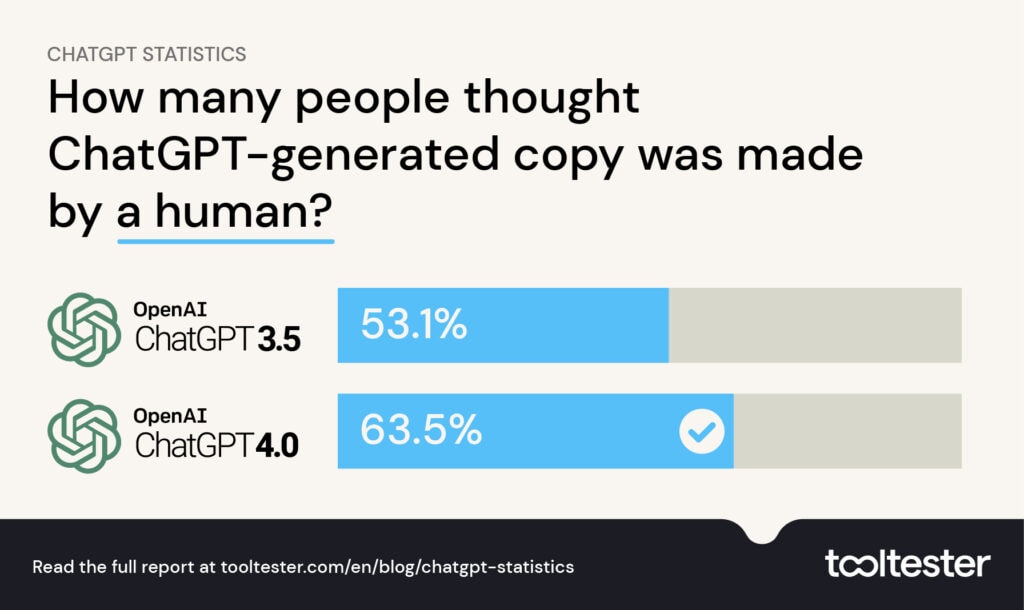

- Over 53% can’t accurately identify content purely made by AI chatbots, like ChatGPT. This rises to 63.5% when using the GPT-4.0 model.

- On average, the GPT-4.0 language model is 16.5% better than GPT-3.5 at convincing people AI-generated copy was written by a human.

- AI-generated health content was able to deceive users the most, with 56.1% incorrectly thinking AI content was written by a human, or edited by a human.

- Readers correctly guessed AI-generated content the most in the technology sector, the only sector where more than half (51%) correctly identified AI-generated content.

- With GPT-4.0, tech content was also correctly identified as AI-generated the most (39.7%).

- GPT-4.0 AI content was the most undetectable when it came to travel, with 66.5% of readers thinking the content was written by humans.

- Those who are more familiar with AI tools, like ChatGPT, were marginally better at identifying AI content, but they were still only correct 48% of the time.

- Only 40.8% of people who were completely unfamiliar with generative AI could correctly identify AI content.

- The majority of people (80.5%) believe online publishers posting blogs and news articles should explicitly state if AI was involved in their creation.

- More than seven in ten (71.3%) said they would trust a brand less if they were given AI-generated content without being told so.

- The largest group (46.5%) said they would be OK with AI advising them on health and financial topics, while a separate 42.9% said they would only use such advice if a human had edited and reviewed the content.

Can people tell whether text is created by AI?

To answer the main question of this study quickly: no, people can’t tell the difference between AI content and human-written content.

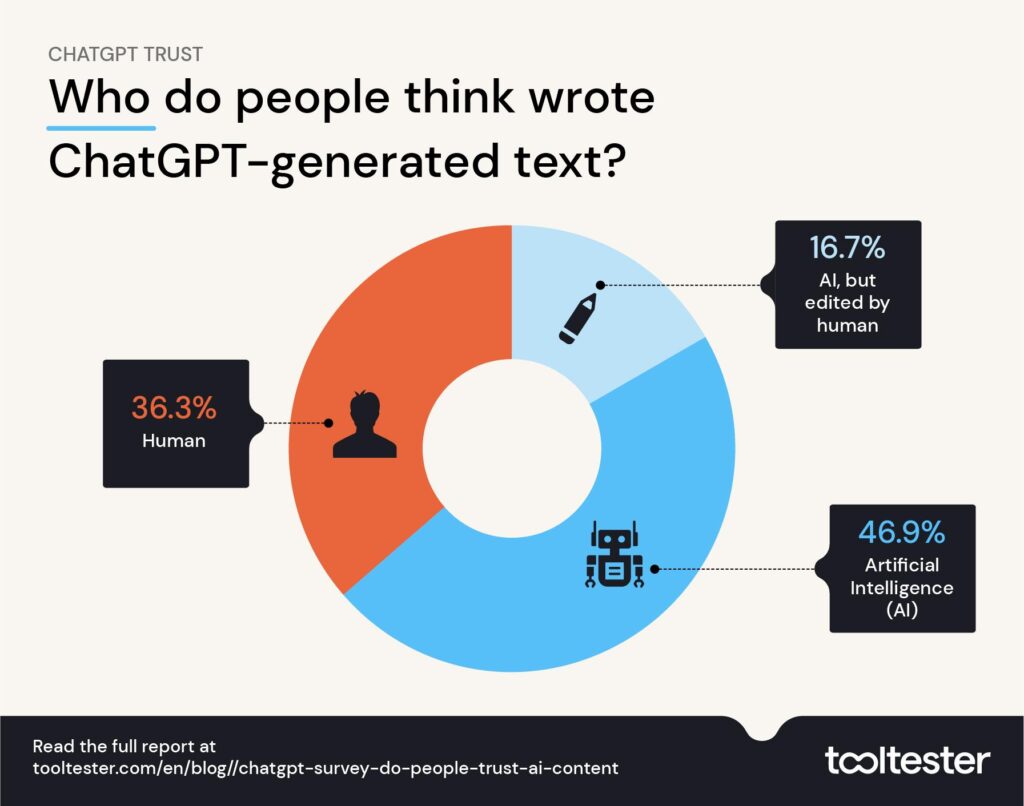

On average, people were only able to correctly identify AI-written content 46.9% of the time. When using GPT-4.0 instead of GPT-3.5, only 36.5% of readers were correctly able to identify AI-written content.

This varied with the topic of the content, but overall people identified AI content a little less than half of the time. This means that AI content goes undetected by more than half of readers.

However, digging into this further, we can see that 36.3% of people thought AI content was actually written by a human, while 16.7% thought it was AI content later edited by a human.

Ultimately, more than half (53%) read AI written content and assumed a human was involved at some point - that’s how convincing it can be straight out of the tool before a human writer has added any flair and personality.

GPT-3.5 vs GPT-4.0: Which model produces more convincing copy?

The original version of this study was undertaken before GPT-4.0 was launched on the 14th March 2023. After this, we updated our findings by polling readers once again.

We used the same topics and prompts, but this time surveyed respondents using content produced by ChatGPT with GPT-4.0 instead of the 3.5 model.

We found that there was an increase of 16.5% in the number of people who thought AI-generated content was made by humans, when we used GPT-4.0.

Using GPT-3.5, we found that 53.1% of people thought ChatGPT copywriting was human, whereas using GPT-4.0, we found 63.5% of people believed the content was created or edited by a human writer.

Is ChatGPT better at writing about certain topics?

One question this study sought to answer is whether ChatGPT writes more human-sounding content on some topics than on others. From our findings, the AI chatbot’s health content is the most convincing, while its technology writing is the easiest for the general public to spot.

Here’s an overview of how the AI content was perceived by the general public when generating text on different topics:

| Who wrote the ChatGPT-generated content? | AI | Human | AI, edited by a human |
|---|---|---|---|
| Tech | 51.05% | 32.97% | 15.98% |
| Entertainment | 47.28% | 36.30% | 16.41% |
| Travel | 46.72% | 36.80% | 16.50% |
| Finance | 45.75% | 37.17% | 17.07% |
| Health | 43.94% | 38.40% | 17.70% |
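The headline averages quoted earlier (46.9% identified as AI, 36.3% judged human, 16.7% judged human-edited) can be reproduced from this table. The sketch below assumes a simple unweighted mean across the five topics; the article does not state whether the published averages were weighted by response count, so treat this as an illustration only.

```python
# Per-topic shares (%) copied from the table above:
# (judged AI, judged human, judged AI edited by a human).
shares = {
    "Tech": (51.05, 32.97, 15.98),
    "Entertainment": (47.28, 36.30, 16.41),
    "Travel": (46.72, 36.80, 16.50),
    "Finance": (45.75, 37.17, 17.07),
    "Health": (43.94, 38.40, 17.70),
}


def column_mean(index):
    """Unweighted mean of one answer column across the five topics (an assumption)."""
    values = [row[index] for row in shares.values()]
    return sum(values) / len(values)


avg_ai = column_mean(0)
avg_human = column_mean(1)
avg_edited = column_mean(2)
# Readers who assumed a human was involved at some point.
avg_human_involved = avg_human + avg_edited

print(f"Judged AI:                {avg_ai:.1f}%")
print(f"Judged human:             {avg_human:.1f}%")
print(f"Judged AI, human-edited:  {avg_edited:.1f}%")
print(f"Human involved (total):   {avg_human_involved:.1f}%")
```

Under that assumption the four values round to 46.9%, 36.3%, 16.7%, and 53.1%, which lines up with the figures reported in the text.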

As this study has since been updated, we can also compare the results of GPT-3.5 and GPT-4.0 when it comes to content on different topics. Just like GPT-3.5, when we used GPT-4.0, the language model was detected the most in technology content (39.7%).

Travel content was the most undetectable with GPT-4.0, as 66.5% of readers believed it to be human or human-edited, while health content was the most undetectable with GPT-3.5 (56.1%).

How believable is ChatGPT-generated copy?

The following table reveals the percentage of people who thought ChatGPT-generated copy was made by an AI, a human, or edited by a human in both GPT-3.5 and GPT-4.0.

| Topic | GPT-3.5: AI | GPT-4.0: AI | GPT-3.5: Human or human-edited | GPT-4.0: Human or human-edited |
|---|---|---|---|---|
| Tech | 51.1% | 39.7% | 49.0% | 60.3% |
| Entertainment | 47.3% | 34.1% | 52.7% | 65.9% |
| Travel | 46.7% | 33.5% | 53.3% | 66.5% |
| Finance | 45.8% | 36.8% | 54.2% | 63.2% |
| Health | 43.9% | 37.8% | 56.1% | 62.2% |
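To make the per-topic comparison concrete, here is a small sketch that takes the "human or human-edited" columns from the table above and computes the shift between models in percentage points, along with the most convincing topic for each model. The variable and function names are illustrative, not part of the study.

```python
# Share (%) of readers who judged AI text as human or human-edited,
# copied from the table above: (GPT-3.5, GPT-4.0).
human_or_edited = {
    "Tech": (49.0, 60.3),
    "Entertainment": (52.7, 65.9),
    "Travel": (53.3, 66.5),
    "Finance": (54.2, 63.2),
    "Health": (56.1, 62.2),
}


def shift_in_points(topic):
    """Percentage-point change from GPT-3.5 to GPT-4.0 for one topic."""
    gpt35, gpt40 = human_or_edited[topic]
    return round(gpt40 - gpt35, 1)


shifts = {topic: shift_in_points(topic) for topic in human_or_edited}

# Topic where each model's output was most often taken for human work.
most_convincing_gpt35 = max(human_or_edited, key=lambda t: human_or_edited[t][0])
most_convincing_gpt40 = max(human_or_edited, key=lambda t: human_or_edited[t][1])

for topic, points in shifts.items():
    print(f"{topic}: +{points} percentage points")
print("Most convincing with GPT-3.5:", most_convincing_gpt35)
print("Most convincing with GPT-4.0:", most_convincing_gpt40)
```

The result matches the text: health leads for GPT-3.5 and travel leads for GPT-4.0, with entertainment and travel showing the largest shift between models.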

Below we’ve broken down the sectors further so you can see how well ChatGPT produces human-sounding content on different topics.

The more familiar you are with AI tools, the more likely you can detect AI content… but only slightly

Those who said they were familiar with AI tools, like ChatGPT, were marginally better at finding AI content, but they were still only correct 48% of the time.

This ability to identify AI writing drops by 7.2 percentage points to 40.8% among people who stated they had never heard of generative AI, suggesting people can learn to see trends and patterns in AI-generated content.

Overall, there was no statistically significant difference between men and women in AI content detection.
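For readers curious what such a comparison can look like, below is a minimal sketch of a two-proportion z-test, one common way to check whether two groups differ. It is not necessarily the test used in this study, which does not name its method. The proportions are the women and men figures reported for technology content in this article (52.4% vs. 49.9%); the group sizes of 500 each are invented placeholders, because the number of men and women surveyed is not given.

```python
from math import erf, sqrt


def two_proportion_z_test(p1, n1, p2, n2):
    """Return (z, two-sided p-value) for the difference between two proportions."""
    # Pooled proportion under the null hypothesis that both groups are equal.
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / standard_error
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - cdf)


# HYPOTHETICAL group sizes: the article does not report them.
N_WOMEN = 500
N_MEN = 500

z, p_value = two_proportion_z_test(0.524, N_WOMEN, 0.499, N_MEN)
print(f"z = {z:.2f}, p = {p_value:.2f}")
```

With these placeholder group sizes the p-value is well above the usual 0.05 threshold, so a gap of this size would not count as significant. Larger groups could change that conclusion.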

How convincing is AI writing in different industries?

Our study polled people on whether the content they were reading was made by an AI or not across five key sectors. The following is a breakdown of how people scored in each genre of content: entertainment, finance, travel, technology, and health.

Readers could spot AI in technology content the most

Our technology questions gave users answers to queries about cell phones, computer hardware, smart tech, AI, and internet providers.

On average, 51% of users correctly identified the AI-written answers as created by AI, while a third (33%) thought the same content was made by humans. The remaining 16% guessed that it was AI content later edited by humans.

Overall, technology content had the highest percentage (51%) of users who correctly identified the AI content. Women were slightly more likely to correctly identify AI-written tech content than men (52.4% vs. 49.9%).

When it came to human-written tech content, only 36% of people could identify that it was written by a human. The largest share (48.4%) were convinced that AI had written it, and the remaining 15.6% incorrectly thought a human had edited AI-written content.

AI-written entertainment content most likely to trick 18-24 year olds

When it came to entertainment writing, specifically, sections of text discussing movies, theater, video games, streaming, and music, 47.3% of respondents were able to identify AI content correctly. Those aged 18-24 were most likely to think AI-written content was written by a human (41.1%) compared to the overall average of 36.3%.

When it came to human-written entertainment content, a similar trend followed: the largest share (44.8%) thought it must have been written by an AI, and slightly fewer (38.9%) correctly guessed it was written by a human.

This confusion follows the general trend that people can’t quite tell the difference between AI and human-written content.

Travel

When it came to online travel writing, we tested respondents with content about finding affordable flights and hotels, preparing for outdoor travel, tips for hiring rental cars, and opinions on using travel agents.

At almost exactly the average, 47% of people identified the AI text correctly, but 36.8% said it was written by a human.

Human-created travel content, however, split readers. The largest share (41.6%) guessed correctly that the text was written by a human and not an AI, but a similar proportion (40.5%) thought the same content had to be made by AI.

Finance

People spotted AI-written finance content around 45.8% of the time, slightly below the overall average, while 37.2% thought that the same AI text had to be made by a human.

When it came to human-written content, 42.5% were confident it was written by an AI, while 40.5% correctly guessed it was created by a human mind.

AI-generated health content managed to deceive 56.1% of users

When it came to health content, we gave users writing about hip replacement costs, dangers of paracetamol, mental health conditions, fitness plans, and preventative health screenings.

In this case, the highest portion of readers in the study (38.4%) thought the AI content was written by a human, while 43.9% were confident it was AI. The remaining 17.7% believed it was human-edited AI text.

Health content created by humans and reviewed by medical professionals didn’t win readers around. The largest share of readers (44.9%) thought human-made content in this area was generated by AI, while 37.9% thought a human created it. Interestingly, this means slightly more people judged the AI health content to be human-written (38.4%) than judged the actual human-written content to be human-written (37.9%).

Out of the five sectors we reviewed in this study, health content generated by AI confused users the most. This could be very dangerous as we move toward a world where AI will likely insert itself into more parts of our lives, including healthcare.

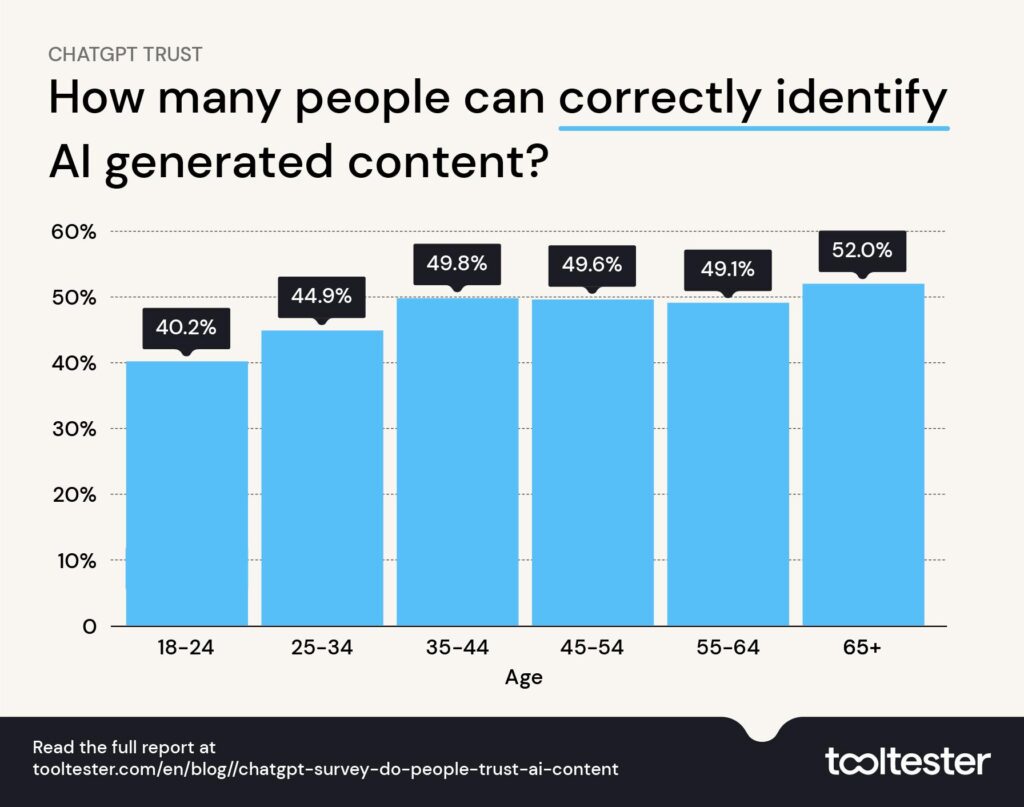

Younger people struggle more with identifying AI content

Across the study, the youngest respondents were generally the poorest at identifying AI-written content, with only 2 in 5 (40.2%) of 18-24 year olds guessing correctly, while those aged 65+ were more cynical and correctly identified AI content more than half of the time (52%).

Do people trust AI-written content?

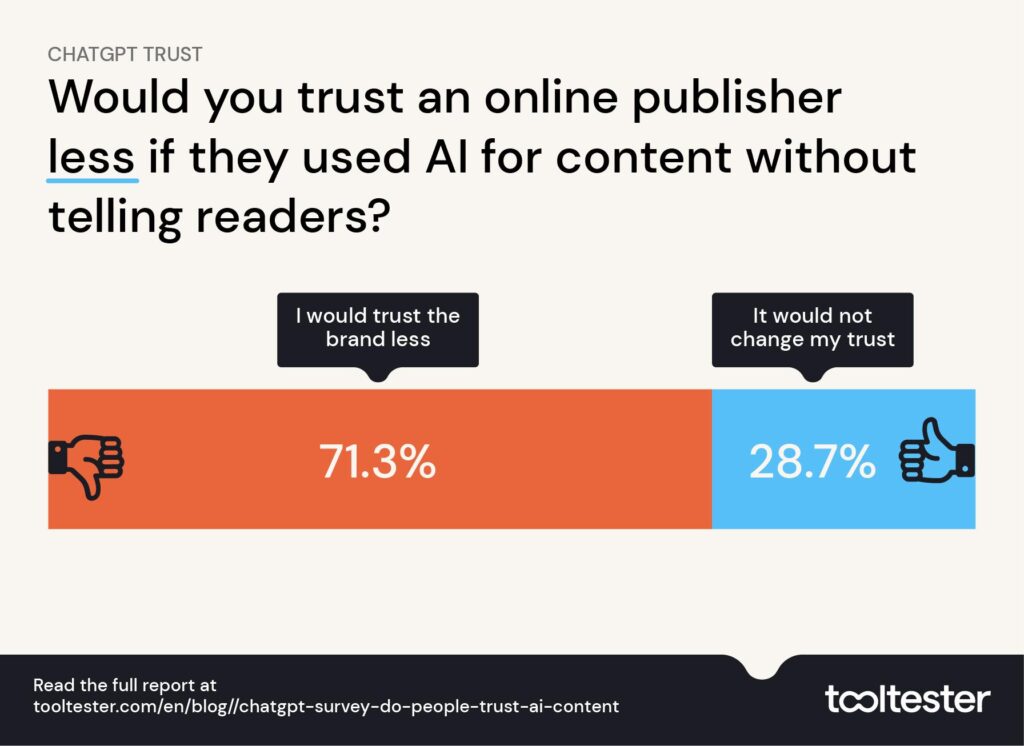

We wanted to know what everyday readers would think if content publishers, such as bloggers, newspapers, and magazines, were to publish AI content without telling users (which some have done, sometimes with content full of errors).

The majority of our respondents (80.5%) said they think AI disclosures should be the norm online and publishers should have to make people aware.

It is then unsurprising that 71.3% also said they would trust a brand less if it published AI content without explicitly saying so. The remaining 28.7%, however, said it would not affect their trust in a brand, indicating that perhaps not everyone needs to be told where their online content comes from.

When it came to the impact these disclosures may have on readers, the story was similar. A slightly smaller majority (67.8%) said they would trust a brand more if AI disclosures were present on a piece of online content, while just under a third (32.2%) said it wouldn’t impact their trust positively or negatively.

Overall, the data indicates that most people would favor brands that explicitly reveal how and where AI has been used to create their content. Whether this becomes the norm in the world of online content is yet to be seen.

Methodology

A total of 1,920 American adults across all age ranges were surveyed and asked to decide whether a piece of text was created by AI, by a human, or by AI and then edited by a human. This was asked across 75 unique pieces of text, and 3,166 responses were collected for this analysis. Survey data was collected from 2/20/2023 to 2/26/2023.

Survey respondents were asked how familiar they were with AI and AI content. The majority of people in this survey had tried some form of AI tool at least once. That tool could be ChatGPT but did not have to be.

- 57.1% of our audience had tried some form of generative AI tool at least once

- 41.1% had heard of generative AI in some form but had never used it personally

- 1.8% had never heard of any generative AI before taking part in the survey

25 questions were used in the analysis with three answers per question: one from AI (ChatGPT), one written by a human journalist, and another created by AI then edited by a human professional copywriter. Questions and answers were completely randomized across users so they could not see more than one answer per question.
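The randomization described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the survey tool's actual logic: the function name, the answer-type labels, and the number of questions shown to each respondent (`questions_per_respondent`) are all hypothetical, since the article does not report them. Sampling questions without replacement is what guarantees that nobody sees two answers to the same question.

```python
import random

# 25 questions, each with three answer versions, gives 75 unique texts.
QUESTION_IDS = list(range(1, 26))
ANSWER_TYPES = ("ai", "human", "ai_edited_by_human")  # illustrative labels


def assign_texts(questions_per_respondent, rng):
    """Pick distinct questions, then one random answer version for each."""
    chosen = rng.sample(QUESTION_IDS, questions_per_respondent)
    return [(question, rng.choice(ANSWER_TYPES)) for question in chosen]


rng = random.Random(2023)  # fixed seed only so the sketch is repeatable
# HYPOTHETICAL: the number of texts each respondent saw is not stated.
assignment = assign_texts(questions_per_respondent=2, rng=rng)

for question, answer_type in assignment:
    print(f"Question {question}: show the '{answer_type}' answer")
```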

The content was chosen by selecting highly-searched (determined by Google search volume) questions in the entertainment, finance, technology, travel, and health sectors.

ChatGPT was given prompts to write as an expert on the relevant topic (for example a travel journalist, or a financial manager) and explain answers “simply”. Content was removed from the AI answer if it made it obvious it was written by an AI, such as “OK I will pretend to be a finance manager and this is what I would say”. Human-written content was sourced from expert sites that had made in-depth content on the respective question. Any sites that had disclosed the usage of AI in their content were not used for this analysis. Examples of the questions and responses given to survey respondents can be found here.

GPT-4.0 Update to Study

To assess the capabilities of GPT-4.0 in ChatGPT, we surveyed 1,394 American adults between the 22nd March and 25th March 2023. They were asked the same questions as to whether they thought a text was produced by an AI, a human, or edited by a human. The topics and queries were the same as in the GPT-3.5 study; examples are given in the spreadsheet linked above.

Other AI Resources

You can even use AI to help you create a website these days! Check out our guide to the best AI website builders for more information.

This work is licensed under a Creative Commons Attribution 4.0 International License.

We keep our content up to date

31 Mar 2023 - Results for ChatGPT 4 survey added
